HTTP Cache Middleware

Caching can improve performance by sparing services a lot of repeated computation. Blacksmith ships with a Redis-based middleware that caches responses according to their Cache-Control HTTP header.

The caching middleware only caches responses whose Cache-Control header contains the public directive. Each cached response is kept for a duration that depends on its max-age directive and on the age of the response.
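The rule above can be sketched as follows. This illustrates standard HTTP caching semantics under our own assumptions and is not Blacksmith's actual code: the helper names `parse_cache_control` and `cache_ttl` are hypothetical, and the remaining lifetime is assumed to be `max-age` minus the response's `Age` header.

```python
from typing import Dict, Mapping, Optional


def parse_cache_control(value: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control header into {directive: argument or None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, arg = part.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') or None
    return directives


def cache_ttl(headers: Mapping[str, str]) -> Optional[int]:
    """Hypothetical helper: seconds a response may stay cached, or None.

    Assumes the header names in `headers` are already lower-cased.
    """
    directives = parse_cache_control(headers.get("cache-control", ""))
    if "public" not in directives:
        return None  # only responses marked public are cached
    try:
        max_age = int(directives.get("max-age") or 0)
        age = int(headers.get("age", "0"))
    except ValueError:
        return None  # malformed values: do not cache
    ttl = max_age - age  # remaining freshness lifetime
    return ttl if ttl > 0 else None


print(cache_ttl({"cache-control": "public, max-age=60", "age": "15"}))  # 45
print(cache_ttl({"cache-control": "private, max-age=60"}))  # None
```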

It also interprets the Vary response header to store distinct responses depending on the request headers.
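To make the Vary behaviour concrete, here is a sketch of how a cache key could be derived: the values of the request headers named in the response's Vary header are folded into the key, so two requests that differ only in, say, Accept-Language are stored separately. The function name and key layout are hypothetical and do not describe how Blacksmith actually names its Redis keys; the special value `Vary: *` is not handled here.

```python
import hashlib
from typing import Mapping


def vary_cache_key(method: str, url: str, vary: str, request_headers: Mapping[str, str]) -> str:
    """Hypothetical helper: build a cache key including the headers listed in Vary."""
    # Header names are case-insensitive, so normalize them before lookup.
    headers = {k.lower(): v for k, v in request_headers.items()}
    names = sorted({n.strip().lower() for n in vary.split(",") if n.strip()})
    # Record each varying header's value ("" when the request does not send it).
    parts = [f"{name}={headers.get(name, '')}" for name in names]
    digest = hashlib.sha256("\n".join(parts).encode("utf-8")).hexdigest()
    return f"{method.upper()} {url} {digest}"


en = vary_cache_key("GET", "/users/1", "Accept-Language", {"Accept-Language": "en"})
fr = vary_cache_key("GET", "/users/1", "Accept-Language", {"accept-language": "fr"})
print(en != fr)  # True: one cache entry per language
```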

It requires an extra dependency, redis or aioredis, which can be installed with one of the following commands:

# For async client
pip install "blacksmith[http_cache_async]"

# For sync client
pip install "blacksmith[http_cache_sync]"

Or, using Poetry:

# For async client
poetry add blacksmith -E http_cache_async

# For sync client
poetry add blacksmith -E http_cache_sync

Usage with the async API

import asyncio

from redis import asyncio as aioredis

from blacksmith import (
    AsyncClientFactory,
    AsyncConsulDiscovery,
    AsyncHTTPCacheMiddleware,
)


async def main():
    cache = aioredis.from_url("redis://redis/0")
    sd = AsyncConsulDiscovery()
    cli = AsyncClientFactory(sd).add_middleware(AsyncHTTPCacheMiddleware(cache))
    await cli.initialize()


asyncio.run(main())

Important

When using Redis, the middleware MUST be initialized. To initialize middlewares, call the method blacksmith.ClientFactory.initialize() after instantiation.

Example of initialization in an ASGI service running with Hypercorn:

import asyncio

from hypercorn.asyncio import serve
from hypercorn.config import Config

import blacksmith

from notif.views import app, cli


async def main():
    blacksmith.scan("notif.resources")
    config = Config()
    config.bind = ["0.0.0.0:8000"]
    await cli.initialize()
    await serve(app, config)


if __name__ == "__main__":
    asyncio.run(main())

Usage with the sync API

import redis

from blacksmith import SyncClientFactory, SyncConsulDiscovery, SyncHTTPCacheMiddleware

cache = redis.from_url("redis://redis/0")
sd = SyncConsulDiscovery()
cli = SyncClientFactory(sd).add_middleware(SyncHTTPCacheMiddleware(cache))
cli.initialize()

Combining caching and Prometheus

Important

The order in which the middlewares are added matters.

GOOD

In the following example, Prometheus will not count cached requests:

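from redis import asyncio as aioredis

from blacksmith import (
    AsyncClientFactory,
    AsyncConsulDiscovery,
    AsyncHTTPCacheMiddleware,
    AsyncPrometheusMiddleware,
    PrometheusMetrics,
)

cache = aioredis.from_url("redis://redis/0")
sd = AsyncConsulDiscovery()
metrics = PrometheusMetrics()
cli = (
    AsyncClientFactory(sd)
    # The cache is added first: cached responses never reach the Prometheus middleware.
    .add_middleware(AsyncHTTPCacheMiddleware(cache, metrics=metrics))
    .add_middleware(AsyncPrometheusMiddleware(metrics))
)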

BAD

In the following example, Prometheus will count cached requests:

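from redis import asyncio as aioredis

from blacksmith import (
    AsyncClientFactory,
    AsyncConsulDiscovery,
    AsyncHTTPCacheMiddleware,
    AsyncPrometheusMiddleware,
    PrometheusMetrics,
)

cache = aioredis.from_url("redis://redis/0")
sd = AsyncConsulDiscovery()
metrics = PrometheusMetrics()
cli = (
    AsyncClientFactory(sd)
    # Prometheus is added first: it also observes responses served from the cache.
    .add_middleware(AsyncPrometheusMiddleware(metrics))
    .add_middleware(AsyncHTTPCacheMiddleware(cache, metrics=metrics))
)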

Warning

By adding the cache after the Prometheus middleware, the blacksmith_request_latency_seconds metric mixes the latencies of responses served from the cache with those of responses returned by the APIs.
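Why the order matters can be illustrated with a generic middleware chain. The sketch below is not Blacksmith's API: the `Handler`/`Middleware` types, `build_chain`, and the in-memory counter and store are hypothetical. It only assumes, consistently with the GOOD and BAD examples, that the first middleware added ends up outermost, so a cache hit answered by an outer cache never reaches an inner metrics middleware.

```python
from typing import Callable, Dict, List

Handler = Callable[[str], str]
Middleware = Callable[[Handler], Handler]


def build_chain(middlewares: List[Middleware], endpoint: Handler) -> Handler:
    """Wrap endpoint so that middlewares[0] is the outermost layer."""
    handler = endpoint
    for middleware in reversed(middlewares):
        handler = middleware(handler)
    return handler


def cache_middleware(store: Dict[str, str]) -> Middleware:
    def wrap(next_handler: Handler) -> Handler:
        def handle(path: str) -> str:
            if path in store:
                return store[path]  # cache hit: inner layers are skipped
            store[path] = next_handler(path)
            return store[path]
        return handle
    return wrap


def metrics_middleware(counter: Dict[str, int]) -> Middleware:
    def wrap(next_handler: Handler) -> Handler:
        def handle(path: str) -> str:
            # Count every request that reaches this layer.
            counter["requests"] = counter.get("requests", 0) + 1
            return next_handler(path)
        return handle
    return wrap


def api(path: str) -> str:
    return f"response for {path}"


good_count: Dict[str, int] = {}
good = build_chain([cache_middleware({}), metrics_middleware(good_count)], api)
bad_count: Dict[str, int] = {}
bad = build_chain([metrics_middleware(bad_count), cache_middleware({})], api)

for _ in range(3):
    good("/users/1")
    bad("/users/1")

print(good_count["requests"], bad_count["requests"])  # 1 3
```

With the cache outermost, only the first (uncached) call is counted; with the metrics layer outermost, all three calls are counted, including the two cache hits.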

Full example of the http_cache

An example using Prometheus and the circuit breaker is available in the examples directory:

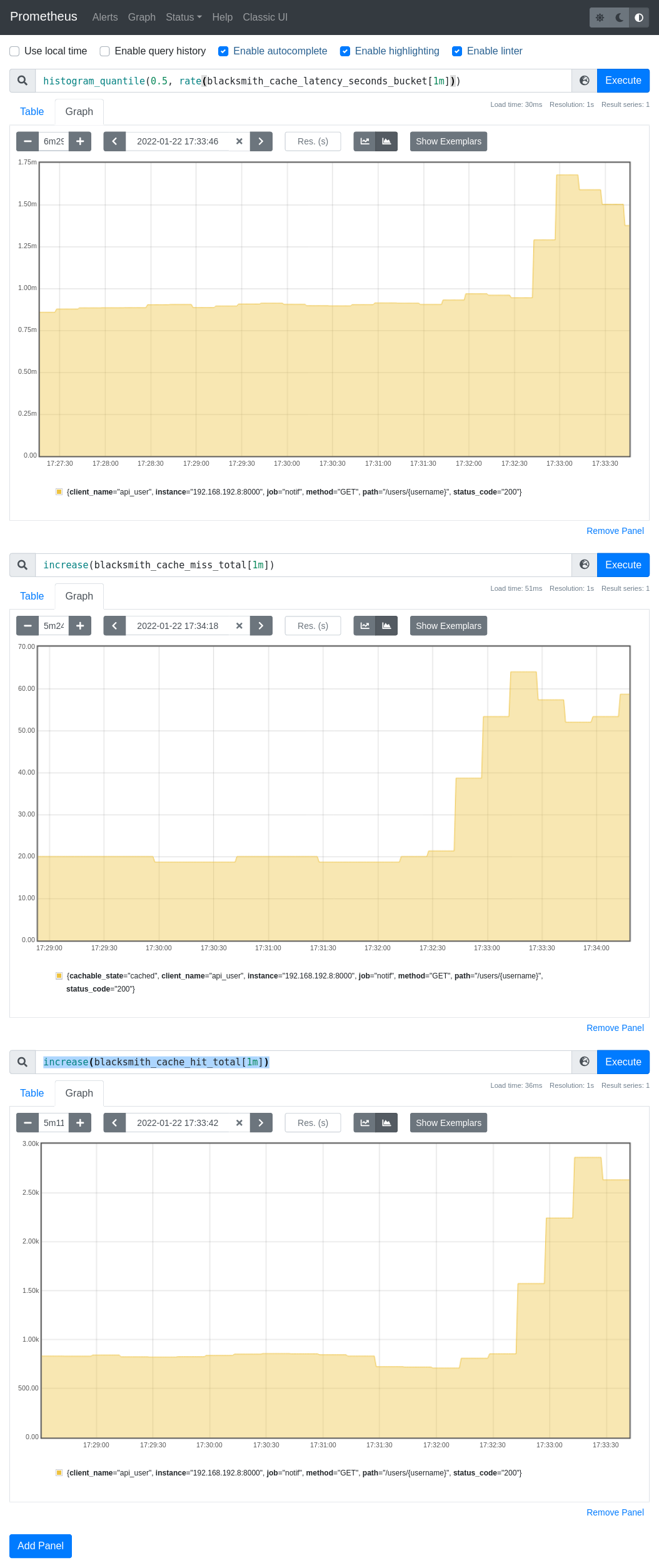

Example with metrics on http://prometheus.localhost/